by garyg

12. July 2011 09:34

I recently responded to a post on one of the MSDN TFS forums from a user upgrading from Team Foundation Server 2008 to Team Foundation Server 2010. He wanted to know whether he could do a delta DB copy after an initial successful upgrade to speed up the process (the full upgrade can take 8 hours or more). (see tfs 2010 re-upgrade)

There is no supported way to achieve this, but the question reminded me of another very important tip: shut down ALL TFS-related services and app pools before making the TFS 2008 DB backups you will move to your new server (I’m assuming you are migrating to different hardware in this case).

This ensures everything is in sync for the backup. If you don’t, there is a chance a record in one of the DBs could be updated before you have a chance to complete the backup. I’ve seen the results of this firsthand, and it was both difficult to diagnose and expensive. Your upgrade will proceed normally and you won’t know there is a problem until you try to create a new team project or a new work item.
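As a rough illustration of the shutdown step, here is a minimal Python sketch that builds the stop commands and, by default, only prints them. The service and app pool names are hypothetical placeholders, not the real TFS 2008 names, and the `appcmd` path assumes IIS 7 or later; check both against your own application tier before running anything.

```python
import subprocess

# Hypothetical placeholders: substitute the service and app pool names
# that actually exist on your TFS 2008 application tier.
TFS_SERVICES = ["ExampleTfsService"]
TFS_APP_POOLS = ["ExampleTfsAppPool"]

# Assumption: IIS 7 or later, where appcmd.exe lives in this folder.
APPCMD = r"C:\Windows\System32\inetsrv\appcmd.exe"


def build_stop_commands(services, app_pools):
    """Return one command (as an argument list) per service and app pool."""
    commands = [["net", "stop", name] for name in services]
    commands += [
        [APPCMD, "stop", "apppool", f"/apppool.name:{name}"]
        for name in app_pools
    ]
    return commands


def stop_all(services, app_pools, dry_run=True):
    """Print the commands, or run them when dry_run is False."""
    for command in build_stop_commands(services, app_pools):
        if dry_run:
            print(" ".join(command))
        else:
            # check=True raises if a stop command fails, so the backup
            # is not started against a half-stopped server.
            subprocess.run(command, check=True)
```

Calling `stop_all(TFS_SERVICES, TFS_APP_POOLS)` prints the commands for review; pass `dry_run=False` from an elevated prompt only once the names are right, and take the backups after every command has succeeded.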

by garyg

18. April 2011 11:33

It would be great if every self-organizing team just jumped right in and did just that, but some do need a little help. Most new groups will traverse the standard forming, storming, and norming stages, and it’s mainly during the first two that you’ll need to help the most. The biggest challenge for those of us who have led traditional teams is learning how to facilitate a self-organizing team without dropping into traditional management behaviors.

My first realization of this came one morning at a Daily Scrum while listening to the team members report their status to one another. It was obvious to everyone in the room that there was an extreme imbalance in the workload and that a few members’ User Stories were falling behind. They were struggling. At the end of the Scrum I expected one or more of the others to offer assistance to the struggling members. I was wrong. I don’t know why, but I expected these formerly very independent people to suddenly step up and help one another out simply because the rules and principles of Scrum had been laid out for them. The people hadn't changed just because the process did.

Coming to the rapid conclusion that they just didn’t know how to start helping one another, and fighting the urge to drop into delegating PM mode, I stayed in the facilitating Scrum Master role. Instead, I brought up the Burn Down and Capacity charts and asked questions: very pointed questions about what they thought the numbers meant.

Leading the team to the correct realization on their own, rather than telling them what to do, helped them take the first leap. It seems like a simple step, but for this group it was the turning point in coming to grips with a key responsibility of a self-organizing team.

So what specifically can you do to help your Agile team reach the “right” conclusions? Here are a few tips I’ve put together from my experiences:

- Highlight issues by bringing them up for discussion. Encourage team members to vocalize the solution on their own rather than you pointing it out.

- Get impediments out of the way. Make sure you aren't one of them. Facilitate communications but avoid being a go-between if at all possible.

- You are not the Admin, do not become one. Insist that all artifacts be created by the Team. It encourages ownership and responsibility.

- When everything is right, it will seem like you do nothing at all. Once the Team gets some experience and success behind them, you should do very little other than truly facilitating.

by garyg

7. November 2010 23:36

So we are in the final go/no-go hour for a product release, and it’s not looking good. My team had been charged with testing the product for the last 3 days. The development team was busy playing whack-a-mole with several P1 and P2 bugs that continued to regress. Our QA team had no involvement at the beginning of this cycle, nor visibility into any hard requirements (I know, not a good start). A particularly nasty P1 that appeared to be in the framework of the product was on its 4th try through the regression testing cycle. This was the one the developer was sure he had fixed “this” time. My confidence of course was not there, and I recommended this release be pulled.

The client of course was not happy and called an emergency meeting to get a handle on what was happening. We were now going over the exact contents of what was supposed to be in this latest release, feature by feature. Seeing the impending revelation creeping up on him, the developer finally revealed there might be something “a little extra” in this code: something completely off the requirements list and project plan, which of course had not gone through the fairly rigorous code review this client normally does. We were unwitting victims of a classic case of Gold Plating gone wrong, nearly done in by the Midas Touch of unchecked development without matching requirements. There was no malicious intent, but the results were the same.

How this situation was resolved isn’t important, but how we could have prevented it is. A simple, solid Requirements Traceability Matrix, combined with some training on recognizing the early signs of Gold Plating, was on my list of recommendations. I’ve often seen the costs of Gold Plating show up as actual extra work and schedule overruns. This was the clearest case I’ve seen to date where it directly caused a quality issue of this magnitude. A good lesson for us all.
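To make the traceability idea concrete, here is a minimal Python sketch of the kind of check a Requirements Traceability Matrix enables: every change in a release should trace back to at least one approved requirement. The data layout, the IDs, and the function name are hypothetical illustrations, not the format used on this project.

```python
def find_untraced_changes(changes, requirement_ids):
    """Return the IDs of changes that map to no approved requirement."""
    approved = set(requirement_ids)
    untraced = []
    for change in changes:
        linked = set(change.get("requirements", []))
        # A change with no link to an approved requirement is a
        # candidate for Gold Plating and deserves a conversation.
        if not linked & approved:
            untraced.append(change["id"])
    return untraced


# Hypothetical example data.
requirements = ["REQ-1", "REQ-2"]
changes = [
    {"id": "CHG-10", "requirements": ["REQ-1"]},
    {"id": "CHG-11", "requirements": []},         # the "little extra"
    {"id": "CHG-12", "requirements": ["REQ-9"]},  # not an approved requirement
]

print(find_untraced_changes(changes, requirements))
```

Run before a release review, a check like this surfaces unrequested work while there is still time to discuss it, rather than in the go/no-go meeting.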

by GaryG

20. July 2010 05:33

I know I usually write about Project Management related topics, so I ask my regular readers to please bear with me. This one was a real pain to solve, so I wanted to share it, since TFS 2010 is fairly new and the error it gives doesn’t really help. Recently, while working with an enterprise client on a TFS 2008 to TFS 2010 migration (a real pain in itself), we came across an error in setting up the Team Build Service. The topology here put the TFS application tier on one server and the Team Build Service on its own machine (a Windows 2008 Server), with both the Controller and Agents on that machine.

The problem we saw was that the controller and agents couldn't connect (and of course all the team builds failed). The error was:

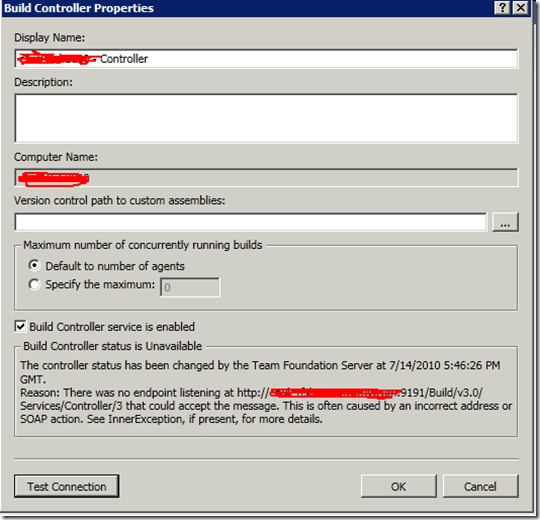

"There was no endpoint listening at http://somemachine.company.com/Build/v3.0/Services/Contoller3 that could accept the message. This is often caused by an incorrect address or SOAP action. See InnerException, if present, for more details."

The error was displayed in the properties dialog for both the Controller and Agent as in the below screenshot:

After a lot of head banging, setting up traces, and a reinstall, I realized that for some reason the configuration wizard had put in the FQDN (Fully Qualified Domain Name) rather than the machine name. Having debugged a similar issue on another product’s Web Service, I decided to change it to use just the machine name, and it instantly connected both the Controller and Agents.

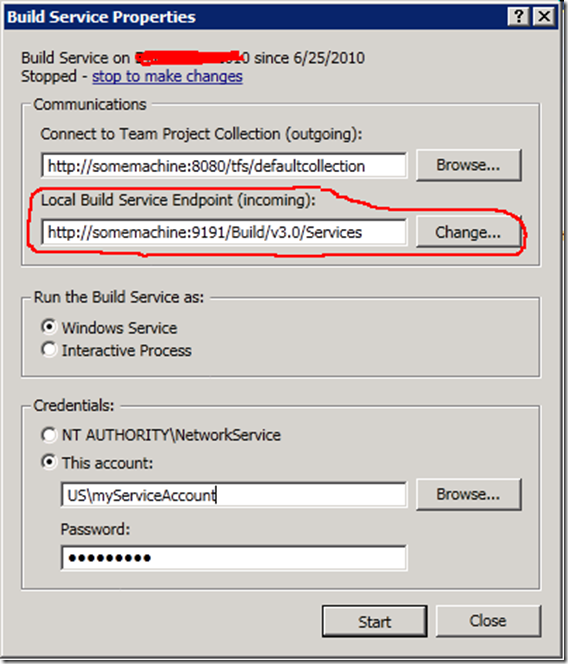

Thankfully, the fix is simple: change the local build service endpoint to use just the machine name rather than the FQDN, then restart the Build Controller and Agents.

To do this, open the TFS Administration Console on the Build Server and click the Build Configuration node. From here, click Properties on the Build Service and you will get the following window:

The “Local Build Service Endpoint (incoming)” will be grayed out until you click the “stop to make changes” link. Click the link to stop the service, then click the Change button and replace just the FQDN with the machine name. From here, click the Start button and your Controller and Agents should be talking fine. It may take a minute after you restart the Build Service for everything to reestablish communication. I hope this helps someone on another TFS 2010 deployment.
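The hostname change itself is small enough to show in a few lines. The following Python sketch is only an illustration of what changes in the endpoint URL, not part of TFS: the function name is hypothetical, and it assumes the host is a DNS name rather than an IP address. The actual change is still made in the Administration Console as described above.

```python
from urllib.parse import urlsplit, urlunsplit


def to_machine_name_endpoint(endpoint):
    """Replace an FQDN host in a URL with just its first label."""
    parts = urlsplit(endpoint)
    host = parts.hostname or ""
    # Assumption: host is a DNS name, so the first label is the machine name.
    machine = host.split(".")[0]
    # Keep an explicit port if the original URL had one.
    netloc = machine if parts.port is None else f"{machine}:{parts.port}"
    return urlunsplit(
        (parts.scheme, netloc, parts.path, parts.query, parts.fragment)
    )


# Hypothetical endpoint, modeled on the shape of the one in the error message.
print(to_machine_name_endpoint(
    "http://somemachine.company.com/Build/v3.0/Services"
))
```

Everything after the host is left untouched; only `somemachine.company.com` becomes `somemachine`.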